
Airflow Consulting

“Orchestrate with Confidence.”

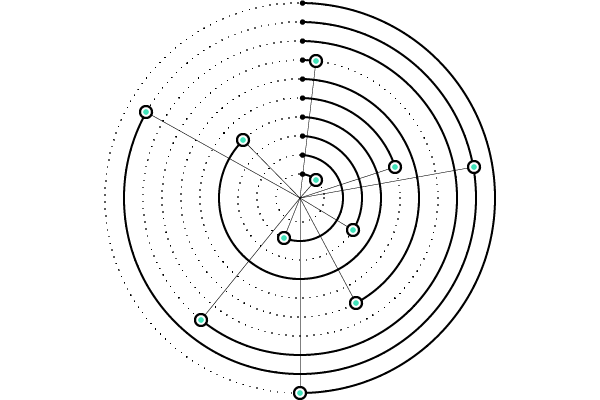

Airflow lets you automate complex workflows with clarity and control. From simple tasks to enterprise-scale pipelines, it keeps your data moving.

Why Use Airflow

Apache Airflow empowers organisations to orchestrate complex data workflows with precision and clarity. Because it is code-first, with pipelines defined as Python code, it offers a high degree of flexibility, enabling teams to define, schedule, and monitor pipelines with full transparency.

Scalable across environments and cloud platforms, Airflow supports the demands of modern data engineering. Its modular design and vibrant open-source community ensure continuous innovation and adaptability. Whether you’re automating daily tasks or managing enterprise-scale data flows, Airflow provides the structure and confidence to deliver with excellence.

Airflow Powers Possibilities

By turning complex processes into manageable, repeatable pipelines, Apache Airflow gives teams the transparency and control to innovate at scale, from automating daily operations to building enterprise-grade data platforms.

Its open, extensible framework supports continuous evolution, ensuring your data strategy grows with your ambitions. With Airflow, possibility becomes progress.

Our Airflow Services

“From Chaos to Coordination.”

Airflow transforms scattered scripts into streamlined, maintainable workflows. Visualise, monitor, and manage every step with ease.

Workflow Orchestration Design

We design scalable, modular workflows using Apache Airflow to orchestrate complex data processes with precision and clarity.

Our approach focuses on building maintainable DAGs (Directed Acyclic Graphs) that automate tasks, manage dependencies, and ensure data flows reliably across systems. Whether you’re integrating multiple data sources, scheduling transformations, or triggering downstream analytics, we tailor each workflow to your business needs. With a focus on performance, reusability, and observability, our orchestration solutions empower teams to move faster, reduce manual effort, and scale with confidence in any cloud or hybrid environment.
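To illustrate how declared dependencies determine execution order, the sketch below uses Python's standard-library `graphlib` rather than Airflow itself. In an Airflow DAG the same relationships would typically be written with the bitshift syntax, for example `extract >> transform >> load`, and by default the scheduler starts a task once its upstream tasks have succeeded. The task names are hypothetical, and this is a conceptual model, not Airflow's scheduler.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical pipeline: each task maps to the set of tasks it depends on
# (its upstream tasks), mirroring `extract >> transform >> load` in Airflow.
dependencies = {
    "extract_orders": set(),
    "extract_customers": set(),
    "transform": {"extract_orders", "extract_customers"},
    "load": {"transform"},
    "notify": {"load"},
}


def execution_batches(graph: dict[str, set[str]]) -> list[list[str]]:
    """Group tasks into batches whose upstream tasks have all completed.

    Tasks within one batch are independent and could run in parallel.
    Raises graphlib.CycleError if the graph is not acyclic.
    """
    sorter = TopologicalSorter(graph)
    sorter.prepare()  # validates the graph; raises CycleError on a cycle
    batches = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # sorted for deterministic output
        batches.append(ready)
        sorter.done(*ready)  # mark the batch complete, unlocking downstream tasks
    return batches


print(execution_batches(dependencies))
# [['extract_customers', 'extract_orders'], ['transform'], ['load'], ['notify']]

# A cycle is rejected, which is why workflows must be *acyclic* graphs.
try:
    execution_batches({"a": {"b"}, "b": {"a"}})
except CycleError as err:
    print("cycle detected:", err.args[1])
```

The two extract tasks land in the same batch because neither depends on the other, which is the kind of parallelism a well-structured DAG exposes.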

Airflow Deployment & Configuration

We specialise in deploying and configuring Apache Airflow across on-premises and cloud environments, including Amazon Managed Workflows for Apache Airflow (MWAA), Google Cloud Composer, and Azure.

Our consultancy ensures each setup is secure, scalable, and tailored to your operational needs. From environment provisioning and DAG scheduling to metadata database tuning and executor selection, we follow best practices to optimise performance and reliability. We also implement role-based access, secrets management, and monitoring tools to support enterprise-grade orchestration. Whether you’re starting fresh or scaling existing workflows, we deliver robust Airflow foundations that grow with your data strategy.
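Airflow lets any configuration option be set through an environment variable named `AIRFLOW__{SECTION}__{KEY}` (with double underscores). This is a common way to manage settings such as the executor and the secrets backend in containerised deployments. The helper `to_airflow_env` below is a hypothetical illustration. The option names are Airflow settings, but the values are placeholders, and the available options vary between Airflow versions, so check the configuration reference for the version you run.

```python
# Illustrative settings only: values are placeholders, and option names
# should be checked against the configuration reference for your version.
settings = {
    ("core", "executor"): "CeleryExecutor",
    ("core", "parallelism"): 64,
    ("core", "max_active_tasks_per_dag"): 16,
    ("secrets", "backend"): (
        "airflow.providers.amazon.aws.secrets.secrets_manager.SecretsManagerBackend"
    ),
}


def to_airflow_env(options: dict[tuple[str, str], object]) -> dict[str, str]:
    """Map (section, key) pairs to AIRFLOW__SECTION__KEY environment variables."""
    return {
        f"AIRFLOW__{section.upper()}__{key.upper()}": str(value)
        for (section, key), value in options.items()
    }


env = to_airflow_env(settings)
for name, value in env.items():
    print(f"{name}={value}")
```

The resulting pairs could be injected into a container definition or deployment manifest, keeping environment-specific settings out of `airflow.cfg` and under version control.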

Data Pipeline Development

We build robust, scalable ETL and ELT pipelines using Apache Airflow to streamline data integration, transformation, and delivery across diverse systems.

Our solutions are designed to handle complex dependencies, automate repetitive tasks, and ensure data consistency from source to destination. Whether ingesting data from APIs, databases, or cloud storage, we create modular workflows that are easy to maintain and extend. With a focus on performance, observability, and fault tolerance, our pipelines support both batch and near-real-time processing needs. We help you unlock the full value of your data securely, efficiently, and at scale.
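Fault tolerance in Airflow is usually configured per task through operator arguments such as `retries`, `retry_delay`, and `retry_exponential_backoff`. The plain-Python sketch below shows the idea behind retrying with exponential backoff. `run_with_retries` and `flaky_extract` are hypothetical names, and Airflow's own delay calculation differs in detail. The `sleep` parameter is injectable so the behaviour can be checked without actually waiting.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def run_with_retries(
    task: Callable[[], T],
    retries: int = 3,
    base_delay: float = 1.0,
    max_delay: float = 60.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call `task`, retrying failures with exponentially growing delays.

    Makes at most `retries + 1` attempts and re-raises the last error.
    """
    if retries < 0:
        raise ValueError("retries must be >= 0")
    for attempt in range(retries + 1):
        try:
            return task()
        except Exception:
            if attempt == retries:
                raise  # retries exhausted: surface the original failure
            # Delay doubles after each failure (1s, 2s, 4s, ...), capped at max_delay.
            sleep(min(base_delay * 2**attempt, max_delay))
    raise RuntimeError("unreachable")


# Hypothetical flaky source: fails twice, then succeeds.
calls = {"n": 0}


def flaky_extract() -> str:
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("source API unavailable")
    return "rows extracted"


delays: list[float] = []
print(run_with_retries(flaky_extract, sleep=delays.append))  # rows extracted
print(delays)  # [1.0, 2.0]
```

Backoff gives a briefly unavailable source time to recover instead of hammering it, which is why transient failures in extraction steps rarely need manual intervention.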

CI/CD for DAGs

We implement robust CI/CD pipelines to streamline the development, testing, and deployment of Apache Airflow DAGs. Using tools like Git, GitHub Actions, GitLab CI, or Azure DevOps, we establish version control, automated testing, and secure deployment workflows. This ensures every change is validated, traceable, and delivered with confidence.

Our approach includes unit testing, linting, DAG validation, and environment-specific deployment strategies. By integrating CI/CD into your data engineering lifecycle, we reduce manual errors, accelerate delivery, and promote collaboration across teams. The result: reliable, production-ready workflows that evolve with your business.
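One inexpensive CI check is to confirm that every DAG file at least parses before anything is deployed. In a full Airflow test suite, a common pattern is to load the DAG folder with `airflow.models.DagBag` and assert that its `import_errors` mapping is empty. The standard-library sketch below is a lighter pre-check that needs no Airflow installation. The function names and file contents are assumptions for illustration.

```python
import ast
from pathlib import Path


def load_dag_sources(folder: str) -> dict[str, str]:
    """Read every .py file under `folder` (recursively) into {path: source}."""
    return {str(path): path.read_text() for path in Path(folder).rglob("*.py")}


def find_syntax_errors(sources: dict[str, str]) -> dict[str, str]:
    """Return {filename: message} for each source that fails to parse."""
    errors = {}
    for filename, source in sources.items():
        try:
            ast.parse(source, filename=filename)
        except SyntaxError as exc:
            errors[filename] = f"line {exc.lineno}: {exc.msg}"
    return errors


# In-memory example; in CI the mapping would come from load_dag_sources(...).
sources = {
    "good_dag.py": "from datetime import datetime\nstart = datetime(2024, 1, 1)\n",
    "broken_dag.py": "def etl(:\n    pass\n",
}
for filename, message in find_syntax_errors(sources).items():
    print(f"{filename}: {message}")
```

A CI job could call `find_syntax_errors(load_dag_sources("dags"))`, with `dags` standing in for your DAG folder, and fail the build if the result is non-empty. A syntax check catches only a subset of problems; missing imports or invalid DAG definitions still require loading the files with Airflow.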

Integration with Data Ecosystems

We connect Apache Airflow with leading tools and platforms such as dbt, Apache Spark, Snowflake, BigQuery, and cloud storage solutions to create seamless, end-to-end data workflows.

Our consultancy ensures that each integration is optimised for performance, reliability, and scalability, enabling your pipelines to orchestrate across diverse technologies with ease. Whether you’re transforming data with dbt, processing at scale with Spark, or loading into cloud data warehouses, we build workflows that are modular, maintainable, and production-ready. With Airflow as the orchestration layer, your entire data ecosystem becomes more connected, automated, and insight-driven.
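A typical way to orchestrate dbt from Airflow is to run each dbt command as its own task, so that building and testing models are separate, individually retriable steps. The command strings could, for instance, be passed to `BashOperator(bash_command=...)`. The sketch below only builds the commands. `dbt_command` and the model selector `orders+` are hypothetical, while `--select` and `--target` are standard dbt CLI flags.

```python
import shlex
from typing import Optional


def dbt_command(
    subcommand: str, select: Optional[str] = None, target: Optional[str] = None
) -> list[str]:
    """Build a dbt CLI invocation as an argument list (hypothetical helper)."""
    cmd = ["dbt", subcommand]
    if select:
        cmd += ["--select", select]  # which models; a trailing "+" adds descendants
    if target:
        cmd += ["--target", target]  # which profile target (environment) to use
    return cmd


# One orchestration step per dbt command: build the models, then test them.
steps = [
    dbt_command("run", select="orders+", target="prod"),
    dbt_command("test", select="orders+", target="prod"),
]
for step in steps:
    print(shlex.join(step))
# dbt run --select orders+ --target prod
# dbt test --select orders+ --target prod
```

Keeping `run` and `test` as separate tasks means a failed data test can be investigated and retried on its own without rebuilding the models.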

Performance Optimisation

We optimise Apache Airflow environments to ensure high performance, reliability, and scalability. Our consultancy focuses on tuning key components such as task parallelism, executor configuration, and resource allocation to maximise throughput and minimise latency.

We analyse DAG structure, task dependencies, and scheduling intervals to identify bottlenecks and improve efficiency. Whether you’re running thousands of tasks daily or scaling across teams, we tailor solutions to your workload and infrastructure. With proactive monitoring and fine-tuned settings, we help you achieve faster, more resilient workflows, ensuring Airflow performs at its best, even under pressure.
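When analysing DAG structure for bottlenecks, one useful measure is the critical path. This is the chain of dependent tasks with the largest total duration. No amount of extra parallelism can make a run finish sooner than this chain, so shortening tasks on it is usually where tuning pays off. The sketch below computes the critical path from per-task durations, for example averages taken from recent runs. The task names and minute values are invented for illustration, and `critical_path` is a hypothetical helper, not an Airflow API.

```python
from graphlib import TopologicalSorter
from typing import Optional


def critical_path(
    durations: dict[str, float], upstream: dict[str, set[str]]
) -> tuple[float, list[str]]:
    """Return (total duration, task chain) for the longest dependency chain.

    Every task, including those with no upstream tasks, must appear in `durations`.
    """
    graph = {task: upstream.get(task, set()) for task in durations}
    finish: dict[str, float] = {}
    parent: dict[str, Optional[str]] = {}
    for task in TopologicalSorter(graph).static_order():
        preds = sorted(graph[task])
        # A task can start only once its slowest upstream task has finished.
        slowest = max(preds, key=finish.__getitem__) if preds else None
        start = finish[slowest] if slowest is not None else 0.0
        finish[task] = start + durations[task]
        parent[task] = slowest
    # Walk back from the task that finishes last to recover the chain.
    node: Optional[str] = max(finish, key=finish.__getitem__)
    total = finish[node]
    path = []
    while node is not None:
        path.append(node)
        node = parent[node]
    return total, path[::-1]


durations = {  # minutes, illustrative only
    "extract_orders": 10.0,
    "extract_customers": 4.0,
    "transform": 15.0,
    "load": 6.0,
    "report": 2.0,
}
upstream = {
    "transform": {"extract_orders", "extract_customers"},
    "load": {"transform"},
    "report": {"transform"},
}
print(critical_path(durations, upstream))
# (31.0, ['extract_orders', 'transform', 'load'])
```

In this example, speeding up `extract_customers` or `report` would not shorten the run at all, while any saving on `extract_orders`, `transform`, or `load` would (until another chain becomes the longest).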

Technologies That Power Us

Engines Behind Our Intelligent Solutions

We are powered by a dynamic ecosystem of data and AI technologies that enable precision, agility and innovation. From scalable cloud platforms and modern data lakes to advanced machine learning, generative models and agentic systems, our technical foundation is built for resilience and progress.

These technologies are the engines behind our intelligent solutions, transforming insight into action and strategy into measurable impact.